In one word: Validity.

When I work with clients, from professional accountants to bankers to IT specialists, to build new or updated certification exams, we conduct a job analysis early in the project. Our focus is a little different from that of Human Resources practitioners who conduct job analyses, but our methods are much the same. As credentialing program consultants, our aim is to build validity evidence for the scores associated with a given certification exam. In other words, we want to make sure that when examinees are credentialed on the basis of a passing score, that score indicates they actually demonstrated the knowledge, skills, and attitudes required to perform a given job in a real-world setting.

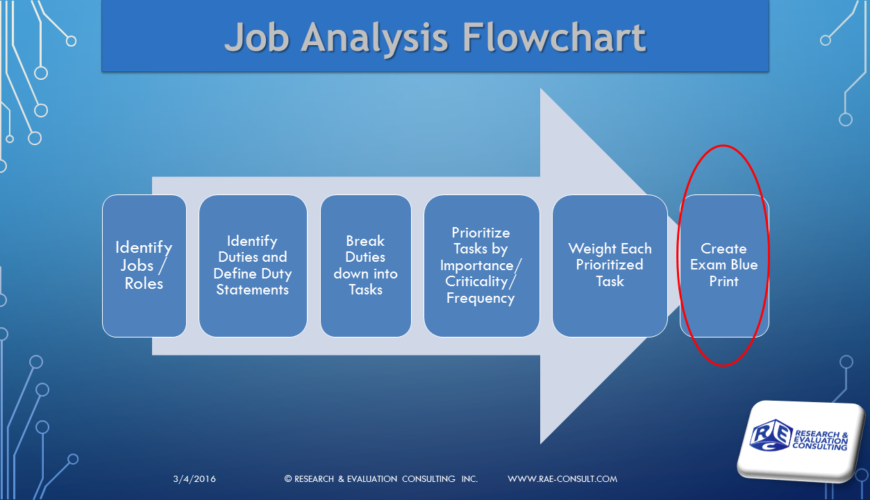

“So how does that work?” you may be asking. Our job analysis helps us identify the content domains for the exam. These content domains are weighted in an exam blueprint to mirror the duties and tasks of the real-world job, and from that blueprint we develop exam questions. That’s it in a very compact nutshell; it is a simplification, as the intent of this post is not to explain how we do job analyses. What we are attempting to do with our job analysis is build the case for content-related validity evidence.
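As a rough illustration of the weighting step, here is a minimal Python sketch that turns domain weights into item counts for an exam form. The domain names, the weights, the 60-item form length, and the `allocate_items` helper are all hypothetical, and the largest-remainder rounding is just one reasonable choice rather than a description of our actual process.

```python
from math import floor


def allocate_items(domain_weights, total_items):
    """Split total_items across content domains in proportion to their weights."""
    total_weight = sum(domain_weights.values())
    # Exact (possibly fractional) share of items for each domain.
    exact = {d: total_items * w / total_weight for d, w in domain_weights.items()}
    # Start from the whole-number part of each share.
    counts = {d: floor(share) for d, share in exact.items()}
    leftover = total_items - sum(counts.values())
    # Give the remaining items to the domains with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda d: exact[d] - counts[d], reverse=True)
    for d in by_remainder[:leftover]:
        counts[d] += 1
    return counts


# Hypothetical blueprint weights (percent of the job each domain represents).
weights = {"Lending": 42, "Compliance": 33, "Customer service": 25}
blueprint = allocate_items(weights, 60)
print(blueprint)
```

With these made-up numbers, the domain that carries the most weight in the job also receives the most questions, which is the link from job to exam that the blueprint is meant to preserve.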

The Job Analysis Slide (above) we use with our clients may help you visualize the flow of a job analysis. We want to ensure that there are strong links from the job to the exam.

Why is validity so important? Validity is the trump card in all exam development. Here it is the strength of the links between the job and the exam content, and content-related validity evidence is the type of validity evidence most closely tied to exam development. The Standards for Educational and Psychological Testing, our go-to resource in the educational measurement field, says essentially that “validity” refers to the degree to which evidence and theory support the interpretation of test scores, and that to validate a proposed or actual interpretation of test scores is to evaluate the claims based on those scores. For example, does a successful score on my client’s banking certificate exam mean the test-taker has demonstrated that they meet the standards set by the bank to engage in the job/role of “x” in a real-world setting? If the exam were not built from a job analysis, there would be little basis for claiming that a successful examinee could do the job in the real world; the exam score would lack the validity evidence needed to support that inference.

The validity argument built to support the intended use of the exam scores is crucial to mitigating the risk associated with awarding the credential. The organization awarding the credential, whether it is a technical certification, a completion certificate, or a professional license, must stand behind the meaning and intent of that credential. The only way it can do so confidently is to have assurance that the exam content was an appropriate and representative sample of the types of things the successful examinee will have to do in the real-world workplace. That assurance is based on a thorough job analysis at the front end of the project. The job analysis is the foundation of the content-related validity evidence for the inferences drawn from the exam scores.

Of course, content-related validity evidence stemming from a job analysis works really well for exams that test specific skills, where it is relatively easy to define content domains and draw representative samples from those domains to include on the exam. For more broadly defined areas of performance, content-related validity evidence is more difficult to gather, but still essential for any intended exam score inference.

Very simply, we want to create strong links from the job’s duties and tasks to the exam content, to contribute validity evidence for a credentialing exam’s score-based inferences. This is why we conduct job analyses with our clients.

There are volumes written on validity and content-related validity, but for those who want a relatively quick and solid peek at some foundational literature on the subject, please refer to:

Kane, M. T. (2006a). Content-related validity evidence. In S. M. Downing & T. M. Haladyna (Eds.), Handbook of test development (pp. 131-154). Mahwah, NJ: Lawrence Erlbaum Associates.

Kane, M. T. (2006b). Validation. In R. L. Brennan (Ed.), Educational measurement (4th ed., pp. 17-64). Westport, CT: Praeger.

Raymond, M., & Neustel, S. (2006). Determining the content of credentialing examinations. In S. M. Downing & T. M. Haladyna (Eds.), Handbook of test development (pp. 181-224). Mahwah, NJ: Lawrence Erlbaum Associates.