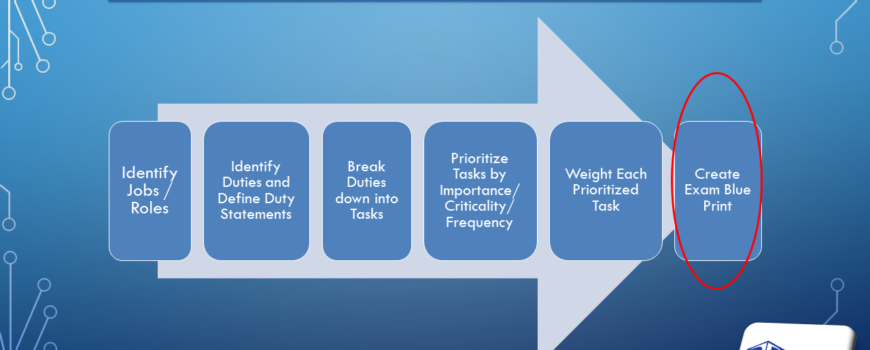

About a year ago, I had the privilege of serving as a panel discussant at the Humber College – Research Analysts’ Program Spring Symposium. Each discussant was asked to comment on the following question: What new ethical concerns are being raised in market, social, and evaluation research due to the advent of AI and automation? The points I made in my remarks are below. But after a year, do I think differently? The answer is “No.”

I just returned from a trip to New York, where I was working with a client that specializes in trade and economic sanctions. The client invited several subject matter experts for me to work with over two intense days. We were working on the foundational steps of building a certification exam for international sanctions specialists. I learned much from these highly educated, intelligent, seasoned experts in the field. They worked for […]